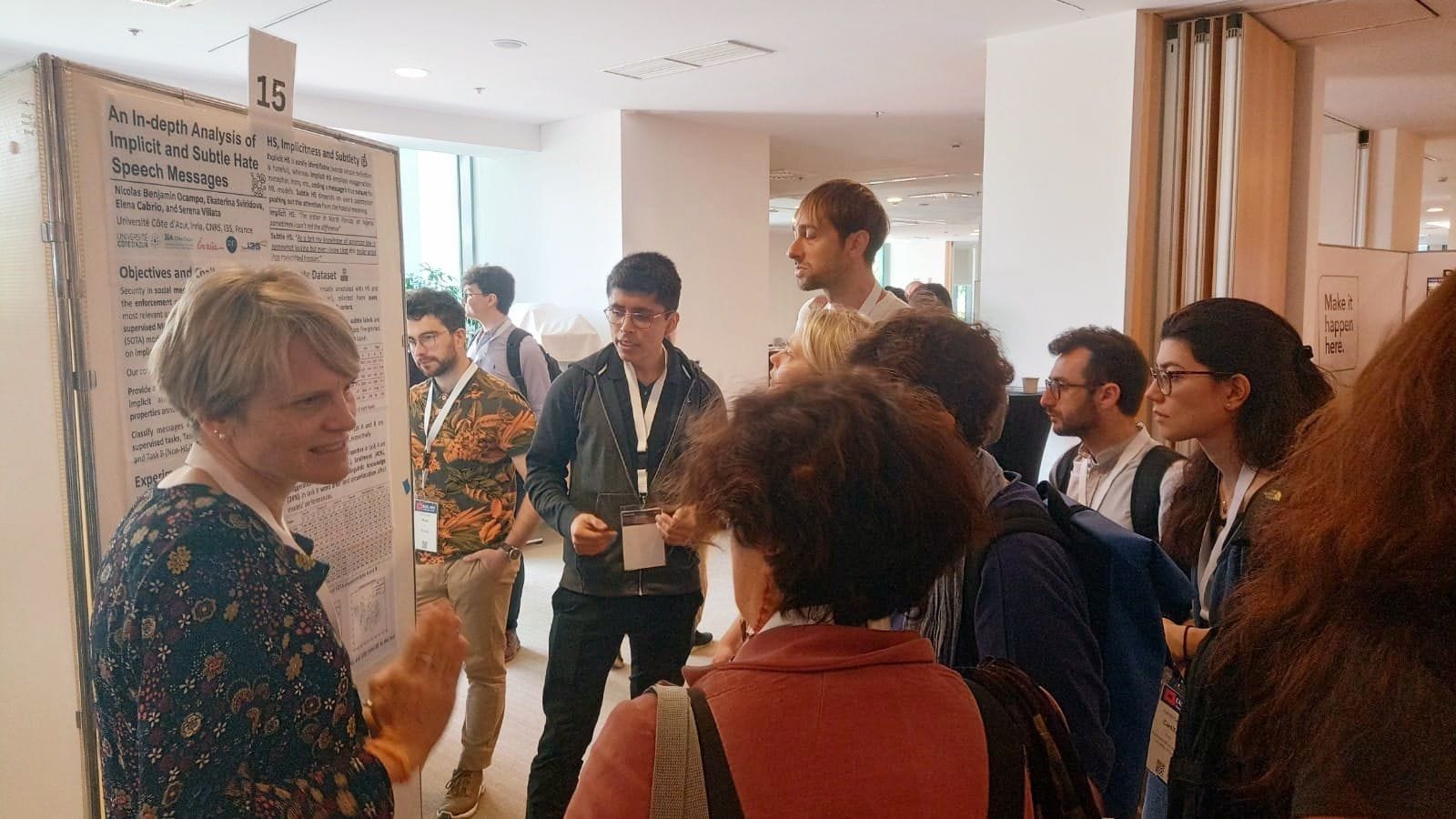

At the beginning of May, I attended the EACL 2023 conference, where I presented the first publication of my Ph.D., entitled "An In-depth Analysis of Implicit and Subtle Hate Speech Messages". Here, I share my experience at one of the top conferences in NLP.

Volunteering

Volunteering at the conference was an excellent opportunity to connect with fellow Ph.D. students working in similar research areas. We had some duties, such as handling check-in registration and helping out at the plenary and poster sessions. The workload is usually highest on the first registration day (when volunteers shine the most). In the shifts after the first day, people only come by sporadically to register or ask for guidance. Since it is such a good chance to get to know other students working in related domains, applying to volunteer is, for me, a must at any conference.

Venue and Organization

The choice of the conference venue, Valamar Lacroma Dubrovnik Hotel, for EACL 2023 struck a good balance between offering various amenities and keeping attendees engaged on-site during breaks. The overall organization of the event was excellent, with only a few minor changes made on the fly. Additionally, the city of Dubrovnik itself was beautiful and small enough to explore its main attractions in one or two evenings.

Keynotes

I want to highlight one of the three invited talks, given by Edward Grefenstette and entitled "Going beyond the benefits of scale by reasoning about data", which focused on the effectiveness of Large Language Models (LLMs). He discussed how these models need only a few data samples reflecting a new behavior or application in order to reproduce that behavior. Therefore, scaling up both the data and the model remains a promising direction, as long as we keep providing new data and tasks to train our LLMs on. Additionally, he showed that LLMs are less affected by "recency bias", are easy to optimize, and are hard to overfit, and that a single epoch (possibly over only a fraction of the data) is commonly sufficient to train them.

Papers

I particularly liked the papers focused on ethical issues and responsible AI. This conference had much to offer in this direction, with many outstanding contributions that sparked interesting discussions.

Below are some of the papers I was able to look at in detail:

Generation-Based Data Augmentation for Offensive Language Detection: Is It Worth It?

Camilla Casula and Sara Tonelli

They presented an evaluation of existing and novel data augmentation setups based on generative large language models for offensive language detection. They tested their approach in a cross-evaluation setting, showing that the positive effect of data augmentation may not be consistent across setups.

Why Don't You Do It Right? Analysing Annotators' Disagreement in Subjective Tasks

Marta Sandri, Elisa Leonardelli, Sara Tonelli, and Elisabetta Jezek

They study multiple types of disagreement (organized into a taxonomy) that arise when annotating subjective tasks, in order to observe the relation between disagreement and the performance of classifiers trained on the annotated data. (Conclusion: higher disagreement => harder to classify.)

Don't Blame the Annotator: Bias Already Starts in the Annotation Instructions

Mihir Parmar, Swaroop Mishra, Mor Geva, and Chitta Baral

This paper reaches a similar conclusion to the one above, but here the authors focus on identifying biases in annotation instructions (which they call instruction bias) that can lead to overestimating model performance.

In-Depth Look at Word Filling Societal Bias Measures

Matúš Pikuliak, Ivana Beňová, and Viktor Bachratý

They take an in-depth look at word-filling techniques for measuring societal bias in language models, showing their current limitations.

Nationality Bias in Text Generation

Pranav Narayanan Venkit, Sanjana Gautam, Ruchi Panchanadikar, Ting-Hao Huang, and Shomir Wilson

A study of how GPT-2 exhibits positive and negative biases toward certain nationalities based on their internet usage and economic status. They also present a debiasing approach using adversarial triggers.

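Looking for a Needle in a Haystack: A Comprehensive Study of Hallucinations in Neural Machine Translation

Nuno M. Guerreiro, Elena Voita, and André Martins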

They show how machine translation models can hallucinate content, which I didn't expect to happen in a task that seems so straightforward.

There are many more papers that seemed exciting and are on my to-read list. I encourage you to take a look!